Drone Racing Competition

Overview

Autonomous drone racing is an ideal testbed for benchmarking progress in autonomous aerial navigation: a racing drone must perceive, reason, plan, and act on the scale of tens of milliseconds while minimizing the time to reach the goal. These requirements let researchers gauge progress on complex perception, planning, and control algorithms [1-5]. Recently, remarkable progress has been made in drone racing using optimal control (OC) and reinforcement learning (RL). In particular, OC-based methods that combine time-optimal trajectory planning [4] with tracking or contouring control [3] have been proposed. RL, on the other hand, can map observations directly to control commands, forgoing the need for trajectory planning. However, vision-based drone racing [6] requires operating the vehicle at the edge of both its physical limits (high speeds and accelerations) and its perceptual limits (limited field of view, motion blur, limited sensing range, and fast reaction times). These requirements pose a significant challenge to existing methods.

Coming Soon!

The competition is coming soon.

The competition

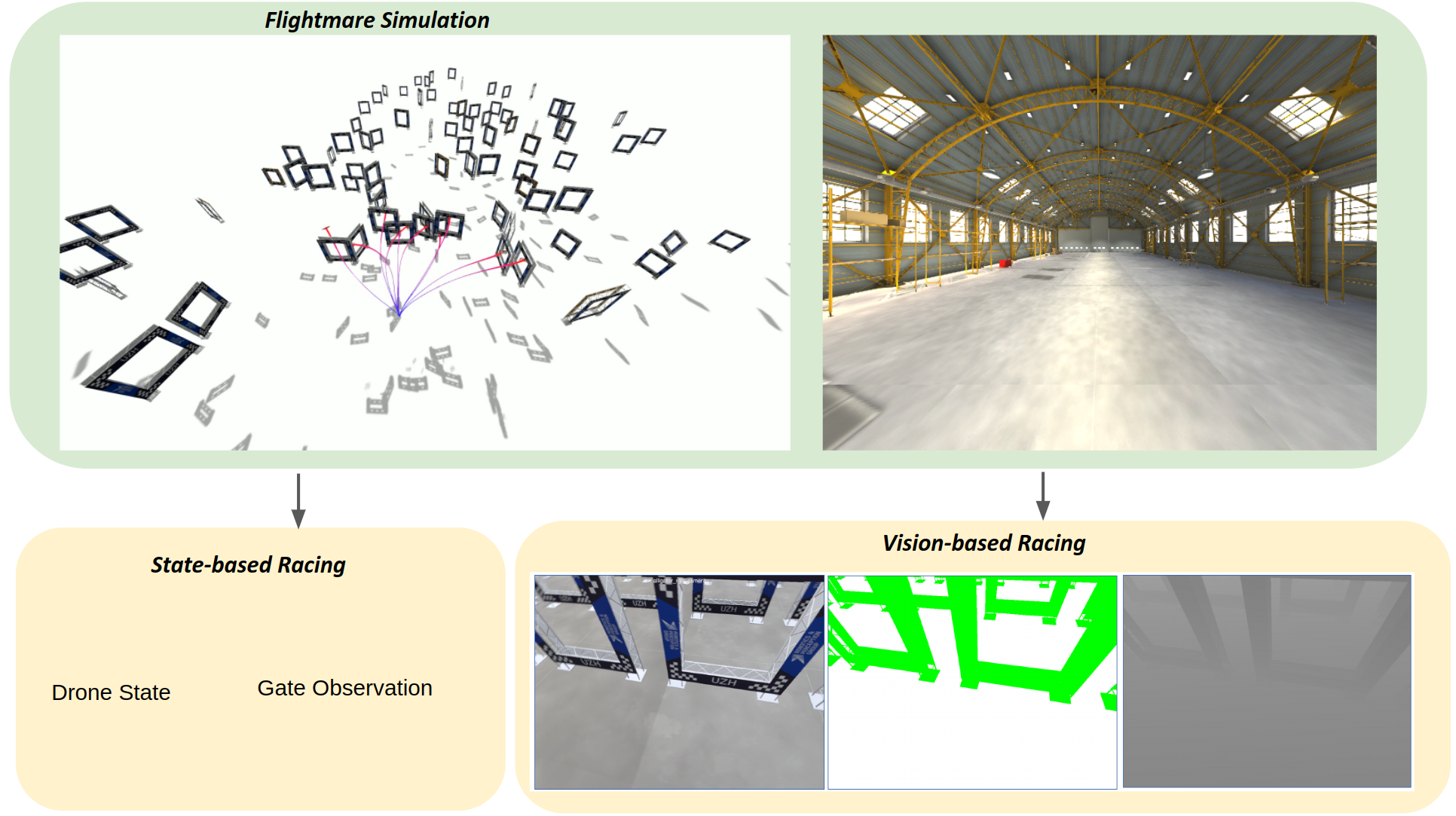

The task is to navigate a high-performance racing drone through a sequence of gates in minimum time. The competition will have two challenges: i) state-based drone racing and ii) vision-based drone racing. The state-based challenge benchmarks planning and control algorithms in terms of time-optimal flight and robustness against unknown effects, such as aerodynamics [7]. The vision-based challenge evaluates the entire system, with an emphasis on perception. To lower the entry barrier, we provide an easy-to-use API for controlling the drone. It abstracts away the complexity of agile drone control so that participants can concentrate exclusively on the racing problem. The competition will be hosted on our in-house simulator Flightmare [8].

State-based Drone Racing

In the first challenge, participants focus on developing planning and control algorithms for drone racing. We will provide full information about the race track as well as the vehicle state. The task is to race the drone using this ground-truth state information. However, the algorithm has to handle disturbances, including unknown aerodynamic effects and uncertainty in the track layout.
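One way to prepare for uncertainty in the track layout is to test a controller on randomly perturbed copies of a nominal track. The following is a minimal sketch of that idea, not part of the competition API: the `Gate` class, the `perturb_track` function, and the noise bounds (0.5 m, 0.2 rad) are all hypothetical names and values chosen for illustration.

```python
import random
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Gate:
    """Hypothetical gate description: center position (m) and heading (rad)."""
    x: float
    y: float
    z: float
    yaw: float


def perturb_track(
    gates: List[Gate],
    max_pos_offset: float = 0.5,  # assumed bound in meters, not an official value
    max_yaw_offset: float = 0.2,  # assumed bound in radians, not an official value
    seed: Optional[int] = None,
) -> List[Gate]:
    """Return a copy of the track with each gate shifted by bounded uniform noise."""
    rng = random.Random(seed)

    def jitter(bound: float) -> float:
        return rng.uniform(-bound, bound)

    return [
        Gate(
            x=g.x + jitter(max_pos_offset),
            y=g.y + jitter(max_pos_offset),
            z=g.z + jitter(max_pos_offset),
            yaw=g.yaw + jitter(max_yaw_offset),
        )
        for g in gates
    ]


# Example: a two-gate nominal track and one reproducible perturbed variant.
nominal_track = [Gate(0.0, 0.0, 2.0, 0.0), Gate(10.0, 5.0, 2.5, 1.57)]
perturbed_track = perturb_track(nominal_track, seed=0)
```

Fixing the seed makes a perturbed layout reproducible, which helps when comparing two controllers on the same set of disturbed tracks.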

Vision-based Drone Racing

In the second challenge, participants are required to develop a fully autonomous system for vision-based drone racing. The observation space of the quadrotor includes RGB images, depth images, and the vehicle’s own states (position, velocity, acceleration, and body rates). The quadrotor can be controlled via different control modes, including thrust control, collective thrust and body rates control, or velocity control. The competition organizers will provide the following infrastructure:

- Photorealistic drone racing environments [7, 8].

- Scripts to generate racing tracks randomly (for training) or to load given racing tracks (for evaluation) [1].

- Baseline drone racing algorithms, including OC-based and RL-based approaches [1-12].

- Parallelization tools (compatible with OpenAI gym) to launch batch training/evaluation on computer clusters and quickly evaluate a set of reinforcement learning pipelines.
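The observation space and control modes described for the vision-based challenge could be represented roughly as follows. This is only an illustrative sketch: the `Observation` and `ControlMode` names, the field layout, and the ordering of the flattened state vector are assumptions, not the interface of Flightmare or of the competition API.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


class ControlMode(Enum):
    """The three control modes named in the text (identifiers are hypothetical)."""
    THRUST = "thrust"
    COLLECTIVE_THRUST_BODY_RATES = "collective_thrust_body_rates"
    VELOCITY = "velocity"


@dataclass
class Observation:
    """Hypothetical container for one observation of the quadrotor."""
    rgb: List[List[Tuple[int, int, int]]]  # image rows of (r, g, b) pixels
    depth: List[List[float]]               # image rows of depth values
    position: Vec3
    velocity: Vec3
    acceleration: Vec3
    body_rates: Vec3

    def state_vector(self) -> List[float]:
        """Flatten the vehicle states into a 12-element list (assumed ordering)."""
        return [*self.position, *self.velocity, *self.acceleration, *self.body_rates]


# Example with 1x1 placeholder images.
obs = Observation(
    rgb=[[(0, 0, 0)]],
    depth=[[1.5]],
    position=(0.0, 0.0, 2.0),
    velocity=(1.0, 0.0, 0.0),
    acceleration=(0.0, 0.0, 0.0),
    body_rates=(0.0, 0.0, 0.1),
)
state = obs.state_vector()
mode = ControlMode.COLLECTIVE_THRUST_BODY_RATES
```

A flat state vector of this kind is a common input format for learned policies, while the images would typically be processed by a separate perception module.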

Assessment

Participants need to submit the trajectories generated by their controller for evaluation. We will evaluate the trajectories on three criteria: (i) feasibility, indicating whether the trajectory can be flown within the physical limits of the drone; (ii) success rate, measuring how often the drone finishes the track without crashing; and (iii) lap time, measuring how fast the drone can finish the track. Additionally, participants are asked to upload a one-page report that cites the corresponding references (see the References section, [1-12]).
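The three criteria can be approximated offline before submitting. The sketch below is one possible self-check under stated assumptions, not the organizers' evaluation code: it treats feasibility as staying below hypothetical speed and acceleration limits estimated by finite differences, and the `Run` record, function names, and limit values are all illustrative.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple

Sample = Tuple[float, float, float, float]  # (t, x, y, z) in seconds and meters


@dataclass
class Run:
    """Hypothetical record of one attempt at the track."""
    crashed: bool
    lap_time: Optional[float]  # seconds; None if the lap was not completed


def _norm(v: Sequence[float]) -> float:
    return math.sqrt(sum(c * c for c in v))


def _differentiate(series):
    """Finite-difference (t, vector) pairs; results sit at interval midpoints."""
    out = []
    for (t0, v0), (t1, v1) in zip(series, series[1:]):
        dt = t1 - t0
        if dt <= 0:
            raise ValueError("timestamps must be strictly increasing")
        out.append(((t0 + t1) / 2, [(b - a) / dt for a, b in zip(v0, v1)]))
    return out


def is_feasible(samples: List[Sample], max_speed: float, max_accel: float) -> bool:
    """Assumed feasibility test: speed and acceleration stay within the limits."""
    positions = [(t, [x, y, z]) for t, x, y, z in samples]
    velocities = _differentiate(positions)
    accelerations = _differentiate(velocities)
    return (all(_norm(v) <= max_speed for _, v in velocities)
            and all(_norm(a) <= max_accel for _, a in accelerations))


def success_rate(runs: List[Run]) -> float:
    """Fraction of runs that finished without crashing."""
    return sum(not r.crashed for r in runs) / len(runs) if runs else 0.0


def best_lap_time(runs: List[Run]) -> Optional[float]:
    """Fastest lap among successful runs, or None if there is none."""
    times = [r.lap_time for r in runs if not r.crashed and r.lap_time is not None]
    return min(times) if times else None


# Example: constant 2 m/s flight along x, and three attempts with one crash.
trajectory = [(0.0, 0.0, 0.0, 1.0), (1.0, 2.0, 0.0, 1.0), (2.0, 4.0, 0.0, 1.0)]
runs = [Run(False, 10.0), Run(True, None), Run(False, 8.0)]
feasible = is_feasible(trajectory, max_speed=3.0, max_accel=1.0)
rate = success_rate(runs)
fastest = best_lap_time(runs)
```

A real feasibility check would more likely compare the required rotor thrusts and body rates against the platform's limits; speed and acceleration bounds are used here only to keep the example short.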

References

- [1] Philipp Foehn et al., "Agilicious: Open-source and open-hardware agile quadrotor for vision-based flight", Science Robotics, 2022. [PDF]

- [2] Sihao Sun, Angel Romero, Philipp Foehn, Elia Kaufmann, Davide Scaramuzza, "A Comparative Study of Nonlinear MPC and Differential-Flatness-based Control for Quadrotor Agile Flight", IEEE Transactions on Robotics, 2022. [PDF, Video]

- [3] Angel Romero, Sihao Sun, Philipp Foehn, Davide Scaramuzza, "Model Predictive Contouring Control for Time-Optimal Quadrotor Flight", IEEE Transactions on Robotics, 2022. [PDF, Video]

- [4] Philipp Foehn, Angel Romero, Davide Scaramuzza, "Time-Optimal Planning for Quadrotor Waypoint Flight", Science Robotics, 2021. [PDF, Video]

- [5] Elia Kaufmann, Leonard Bauersfeld, Davide Scaramuzza, "A Benchmark Comparison of Learned Control Policies for Agile Quadrotor Flight", ICRA, 2022. [PDF, Video]

- [6] Antonio Loquercio, Elia Kaufmann, Rene Ranftl, Mark Müller, Vladlen Koltun, Davide Scaramuzza, "Learning High-Speed Flight in the Wild", Science Robotics, 2021. [PDF, Video]

- [7] Leonard Bauersfeld, Elia Kaufmann, Philipp Foehn, Sihao Sun, Davide Scaramuzza, "NeuroBEM: Hybrid Aerodynamic Quadrotor Model", RSS: Robotics, Science, and Systems, 2021. [PDF, Video]

- [8] Yunlong Song, Selim Naji, Elia Kaufmann, Antonio Loquercio, Davide Scaramuzza, "Flightmare: A flexible quadrotor simulator", Conference on Robot Learning, 2021. [PDF, Video]

- [9] Elia Kaufmann, Antonio Loquercio, Rene Ranftl, Mark Müller, Vladlen Koltun, Davide Scaramuzza, "Deep Drone Acrobatics", RSS: Robotics, Science, and Systems, 2020. [PDF, Video]

- [10] Matthias Faessler, Antonio Franchi, Davide Scaramuzza, "Differential Flatness of Quadrotor Dynamics Subject to Rotor Drag for Accurate Tracking of High-Speed Trajectories", IEEE Robotics and Automation Letters, 2018. [PDF, Video]

- [11] Yunlong Song, Davide Scaramuzza, "Policy Search for Model Predictive Control With Application to Agile Drone Flight", IEEE Transactions on Robotics, 2021. [PDF, Video]

- [12] Drew Hanover et al., "Autonomous Drone Racing: A Survey", arXiv, 2023. [PDF]