AEGNN: Asynchronous Event-based Graph Neural Networks

Code, Video and Paper

Introduction

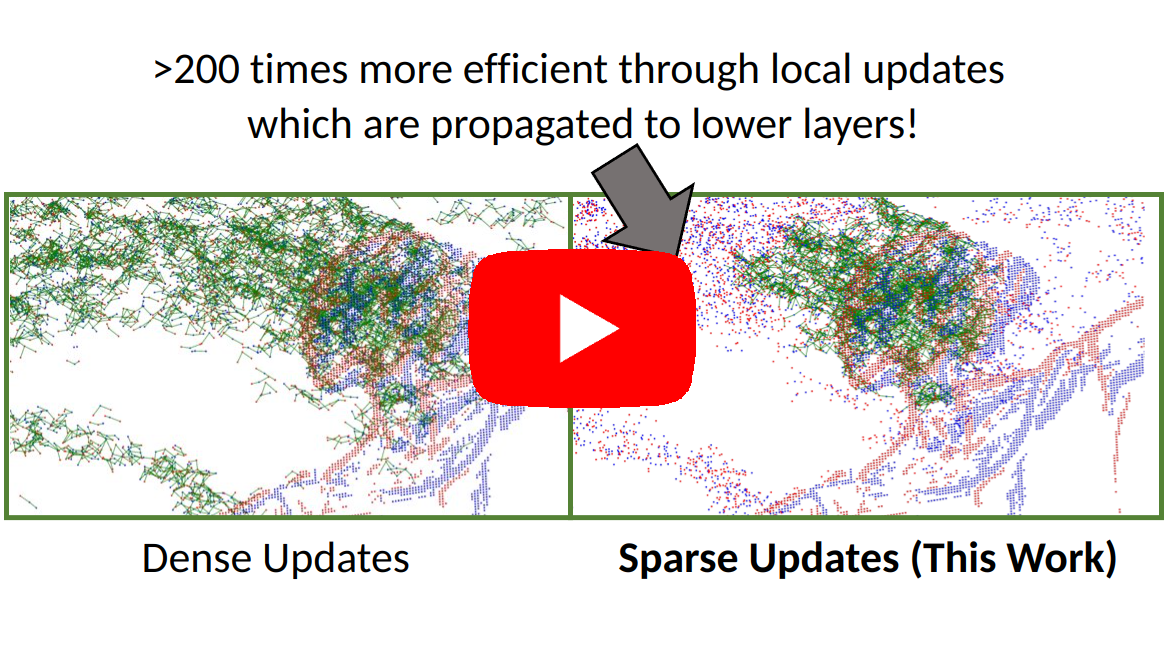

The best-performing learning algorithms devised for event cameras work by first converting events into dense representations that are then processed using standard CNNs. However, these steps discard both the sparsity and the high temporal resolution of events, leading to a high computational burden and latency. For this reason, recent works have adopted Graph Neural Networks (GNNs), which process events as “static” spatio-temporal graphs that are inherently “sparse”. We take this trend one step further by introducing Asynchronous, Event-based Graph Neural Networks (AEGNNs), a novel event-processing paradigm that generalizes standard GNNs to process events as “evolving” spatio-temporal graphs. AEGNNs follow efficient update rules that restrict recomputation of network activations to only the nodes affected by each new event, thereby significantly reducing both computation and latency for event-by-event processing. AEGNNs are easily trained on synchronous inputs and can be converted to efficient, “asynchronous” networks at test time. We thoroughly validate our method on object classification and detection tasks, where we show up to a 200-fold reduction in computational complexity (FLOPs), with similar or even better performance than state-of-the-art asynchronous methods. This reduction in computation directly translates to an 8-fold reduction in computational latency when compared to standard GNNs, which opens the door to low-latency event-based processing.
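To make the update rule described above concrete, here is a minimal sketch of the idea for a single layer. It is not the AEGNN implementation: the toy convolution (ReLU of a weighted neighborhood sum), the symmetric radius graph, and all names (`neighbors`, `node_output`, `layer_full`, `layer_async`) are assumptions chosen for illustration. When a new event arrives, only the new node and the nodes within its radius are recomputed, and the result is checked against a full synchronous recomputation.

```python
import math
import random


def neighbors(pos, i, radius):
    """Indices of all nodes within `radius` of node i, excluding i itself."""
    return [j for j in range(len(pos))
            if j != i and math.dist(pos[i], pos[j]) <= radius]


def node_output(pos, feat, i, radius, w):
    """Toy graph convolution (assumed): ReLU(w * sum of features over i and its neighbors)."""
    total = feat[i] + sum(feat[j] for j in neighbors(pos, i, radius))
    return max(w * total, 0.0)


def layer_full(pos, feat, radius, w):
    """Synchronous reference: recompute the activation of every node."""
    return [node_output(pos, feat, i, radius, w) for i in range(len(pos))]


def layer_async(pos, feat, radius, w, cached):
    """Insert the last event in `pos`/`feat`, reusing `cached` for untouched nodes."""
    new = len(pos) - 1
    # Only the new node and its direct neighbors see a changed neighborhood.
    affected = [new] + neighbors(pos, new, radius)
    out = cached + [0.0]  # copy of the cache with a slot for the new node
    for i in affected:
        out[i] = node_output(pos, feat, i, radius, w)
    return out, affected


# Usage: 199 cached events (x, y, t), then one new event arrives.
random.seed(0)
pos = [tuple(random.uniform(0, 10) for _ in range(3)) for _ in range(200)]
feat = [random.gauss(0, 1) for _ in range(200)]

cached = layer_full(pos[:-1], feat[:-1], radius=2.0, w=0.5)
out, affected = layer_async(pos, feat, radius=2.0, w=0.5, cached=cached)

# The asynchronous update matches the full recomputation.
assert out == layer_full(pos, feat, radius=2.0, w=0.5)
print(f"recomputed {len(affected)} of {len(pos)} nodes")
```

In this sketch the affected set covers one hop because there is only one layer. With several stacked layers, the set of nodes whose activations change would be expected to grow by one hop per layer, so the savings depend on network depth and graph density.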

Publication

If you use this code in a publication, please cite our paper: S. Schaefer*, D. Gehrig* and D. Scaramuzza, "AEGNN: Asynchronous Event-based Graph Neural Networks", IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2022.

    @article{Schaefer21cvpr,
      title={AEGNN: Asynchronous Event-based Graph Neural Networks},
      author={Schaefer, Simon and Gehrig, Daniel and Scaramuzza, Davide},
      journal={IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
      year={2022}
    }